Edgar Cervantes / Android Authority

TL;DR

- Among major AI news-summary systems, Google Gemini performed the worst, showing significant issues in many results.

- Gemini struggled with identifying reliable sources, providing quotes, and linking to its source material.

- While everyone’s tools are showing signs of improvement, Gemini still lags behind.

You cannot conduct a conversation about AI without someone quickly bringing up the inconvenient topic of mistakes. For as useful as these systems can be when it comes to organizing information, and as impressive as the content is that generative AI can seemingly pull out of nowhere, we don’t have to look far before we start noticing all the blemishes in this otherwise polished facade. While there’s definitely been progress since the bad old days of Google AI Overviews hallucinating utter nonsense, just how far have things really come? Some new research is taking a rather concerning look into just that.

The European Broadcasting Union (EBU) and BBC were interested in quantifying the performance of systems like OpenAI ChatGPT, Google Gemini, Microsoft Copilot, and Perplexity when it comes to delivering AI-generated news summaries, especially with 15% of under-25-year-olds relying on AI for their news. The BBC initially conducted both a broad survey and a series of six focus groups, all gathering data about our experiences with and opinions of these AI systems. That approach was later expanded for the EBU’s international analysis.

Looking at beliefs and expectations, some 42% of UK adults involved in this research reported that they trusted AI accuracy, with the number growing in younger age groups. They also claim to be very concerned with accuracy, and 84% say that factual errors would significantly impair that trust. While that may sound like an appropriately cautious approach, just how much of this content is really inaccurate — and are people noticing?

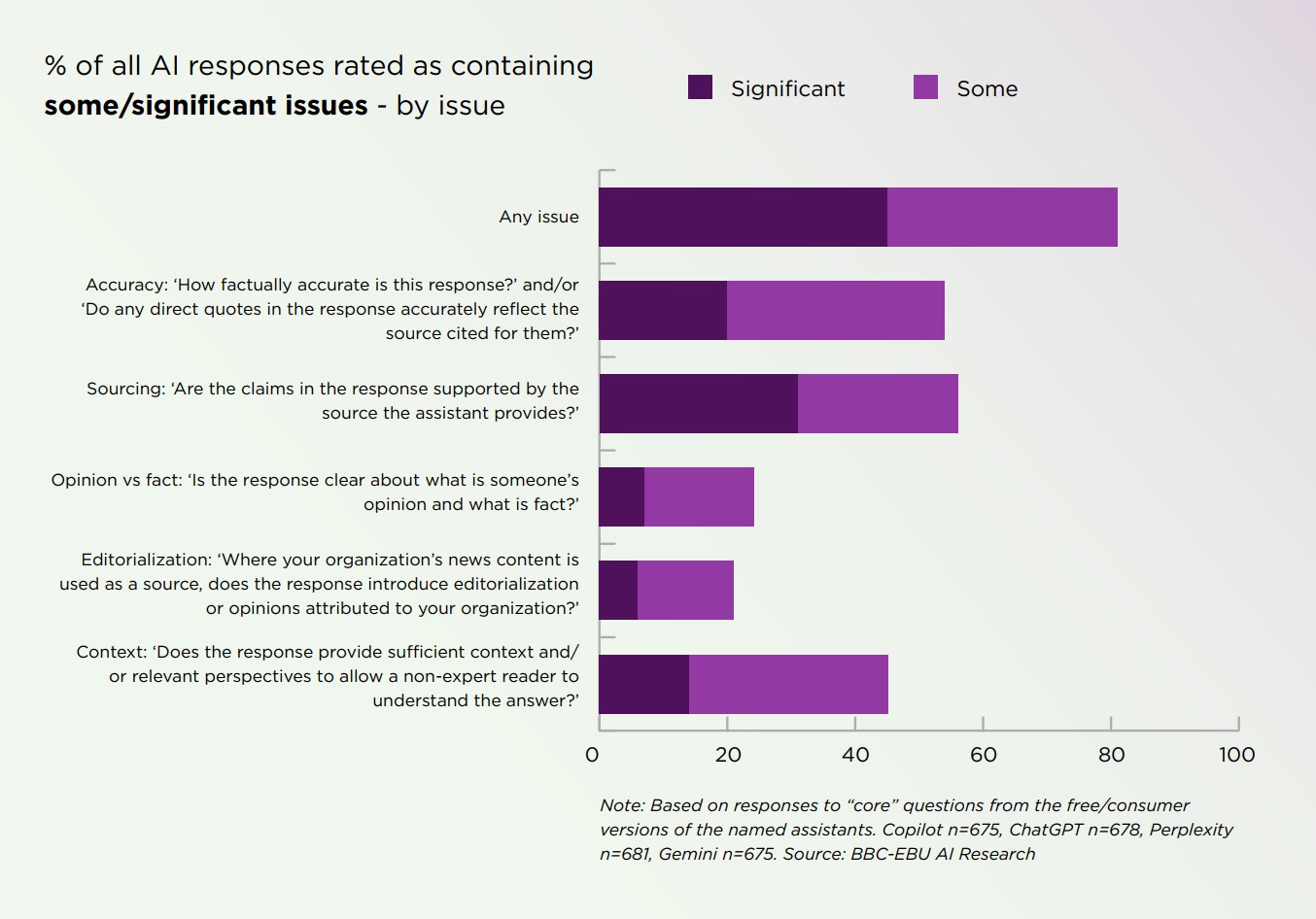

Based on the results, we’d have to largely guess “no,” as the majority of AI responses were found to have some problem with them:

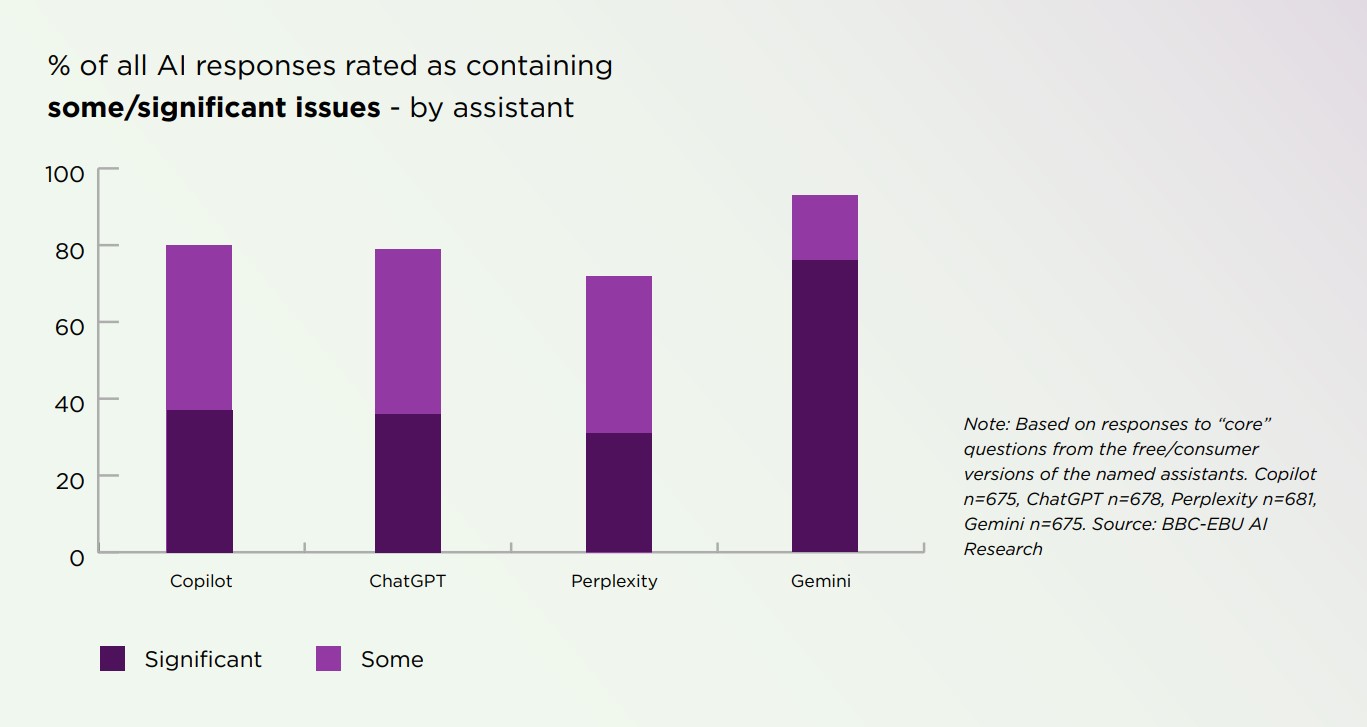

None of the models evaluated performed well, and most were in the same ballpark when it came to how they did in these tests. But then there’s Gemini, which is a pronounced outlier, both in terms of total issues and, much more concerningly, those deemed to be of significant consequence.

What is Gemini doing so poorly? Among the problems the researchers highlight are a lack of clear links to source materials, failure to distinguish between reliable sources and satirical content, over-reliance on Wikipedia, failure to establish relevant context, and the butchering of direct quotations.

In the six months between the collection of the two main data sets this study relies on, these AI systems evolved, and by the end they were showing fewer issues with news summaries than they had at the beginning. That’s great to hear, and Gemini in particular saw some of the biggest gains when it came to accuracy. But even with those improvements, Gemini is still showing far more significant issues with its summaries than its peers.

The full EBU report is definitely worth a read if you’ve got even a passing interest in our relationship with AI-processed news. If it’s not enough to have you seriously reconsidering the level of trust you place in these systems, you probably need to read it more closely.

We’ve reached out to Google to see if the company has any comment on the methods or results shared here, and will update you with anything we hear back.
