A few days ago, we looked into how Apple could one day use brain wave sensors in AirPods to measure sleep quality and even detect seizures.

Now, a new paper shows how the company is exploring deeper cardiac health insights with the help of AI. Here are the details.

A bit of context

With watchOS 26, Apple introduced Hypertension notifications on the Apple Watch.

As the company explains it:

Hypertension notifications on Apple Watch use data from the optical heart sensor to analyze how a user’s blood vessels respond to the beats of the heart. The algorithm works passively in the background reviewing data over 30-day periods, and will notify users if it detects consistent signs of hypertension.

While this feature is far from a medical-grade diagnostic tool, and Apple is the first to acknowledge that “hypertension notifications will not detect all instances of hypertension,” the company also claims the feature is expected “to notify over 1 million people with undiagnosed hypertension within the first year.”

One important aspect of this feature is that it relies on data collected over 30-day periods rather than on instantaneous measurements. In other words, its algorithms analyze trends; they do not produce real-time hemodynamic readings or estimate specific cardiovascular parameters.

And that’s precisely where this new Apple study comes in.

Getting more data from the optical sensor

One thing that’s important to make clear from the start: at no point in this study is the Apple Watch mentioned, nor are there any claims about upcoming products or features.

This study, like most (if not all) studies that come out of Apple’s Machine Learning Research blog, focuses on foundational research and on the technology itself.

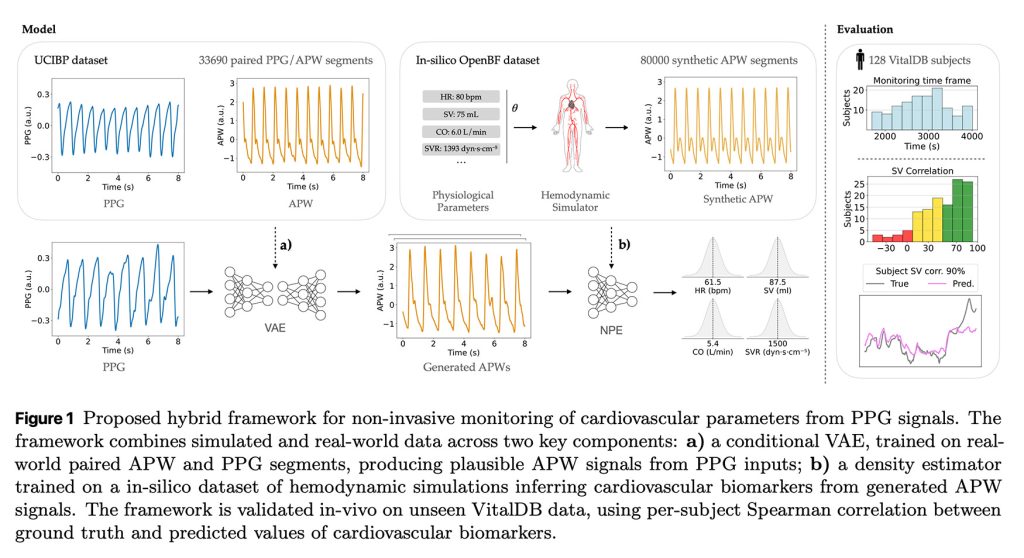

In this particular paper, called Hybrid Modeling of Photoplethysmography for Non-Invasive Monitoring of Cardiovascular Parameters, Apple proposes “a hybrid approach that uses hemodynamic simulations and unlabeled clinical data to estimate cardiovascular biomarkers directly from PPG signals.”

In other words, the researchers demonstrate that it is possible to estimate deeper cardiac metrics using a simple finger pulse sensor based on photoplethysmography (PPG), the same optical sensing modality used in the Apple Watch (though with different signal characteristics).

The Apple researchers started with two datasets: a large set of labeled, simulated arterial pressure waveforms (APWs), and a set of simultaneous real-world APW and PPG measurements.

Next, they trained a generative model to map PPG data to the APW recorded at the same time.

This allowed them, in a nutshell, to infer APW data from PPG measurements with sufficient precision for the purposes of the study.

After that, they fed those inferred APWs into a second model, which was trained to infer cardiac biomarkers, such as stroke volume and cardiac output, from that data.

They achieved this by training this second model with simulated APW data, paired with known cardiovascular parameter values for stroke volume, cardiac output, and other metrics.

Finally, they generated multiple plausible APW waveforms for each PPG segment, inferred the corresponding cardiovascular parameters for each one, and averaged those results to produce a final estimate along with an uncertainty measure.
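
That last step can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the paper's code: `sample_apw` and `estimate_stroke_volume` are hypothetical stand-ins for the generative PPG-to-APW model and the second parameter-inference model, and every number in it is made up.

```python
import random
import statistics

def sample_apw(ppg_segment, rng):
    """Hypothetical stand-in for the generative PPG-to-APW model:
    returns one plausible pressure waveform for a PPG segment."""
    scale = rng.gauss(100.0, 5.0)  # made-up random amplitude scaling
    return [scale * x for x in ppg_segment]

def estimate_stroke_volume(apw):
    """Hypothetical stand-in for the second model (APW -> biomarker).
    Here it is just a made-up function of the pulse amplitude."""
    return 0.8 * (max(apw) - min(apw))

def predict_with_uncertainty(ppg_segment, n_samples=50, seed=0):
    rng = random.Random(seed)
    # Draw several plausible APWs and infer the biomarker for each one.
    estimates = [
        estimate_stroke_volume(sample_apw(ppg_segment, rng))
        for _ in range(n_samples)
    ]
    # The mean is the final estimate; the spread serves as the uncertainty.
    return statistics.mean(estimates), statistics.stdev(estimates)

ppg = [0.1, 0.4, 0.9, 0.6, 0.3, 0.15]  # made-up PPG segment
mean_sv, sd_sv = predict_with_uncertainty(ppg)
print(f"stroke volume estimate: {mean_sv:.1f} +/- {sd_sv:.1f} (arbitrary units)")
```

The point of sampling more than one waveform is that a single PPG segment can be consistent with several pressure waveforms, so the spread of the per-sample estimates gives a rough sense of how confident the pipeline is.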

The results

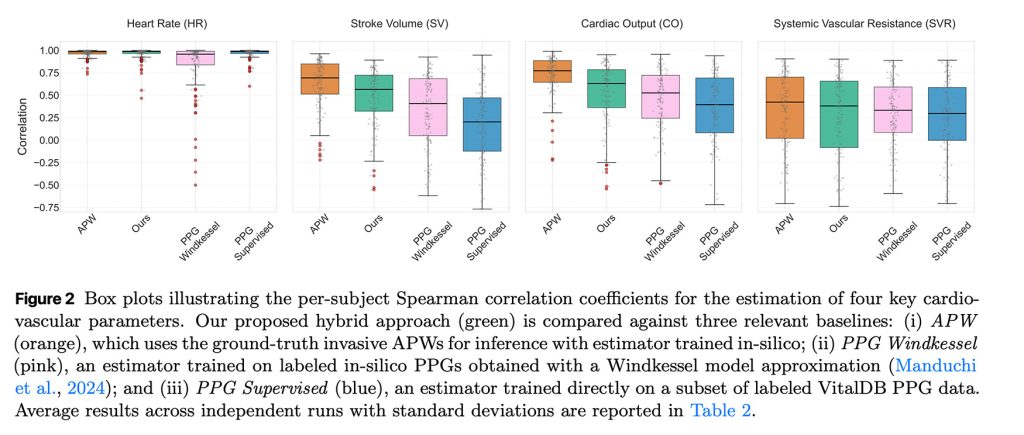

Once the entire training process and model pipeline were in place, they picked an entirely new dataset “comprising APW and PPG signals from 128 patients undergoing non-cardiac surgery, labeled with cardiovascular biomarkers.”

After running this data through the pipeline, they saw that it accurately tracked stroke volume and cardiac output trends, though not their exact absolute values.
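
To see how a method can follow trends well while missing absolute values, consider this toy Python example. The numbers are made up rather than taken from the paper, and Pearson correlation is used here only as one common way to quantify trend agreement, since the article does not say which metrics the researchers used.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def mean_abs_error(xs, ys):
    """Average absolute difference between reference and estimate."""
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)

# Made-up reference cardiac output over time, in L/min.
reference = [4.0, 4.5, 5.2, 4.8, 4.1, 3.9]
# Made-up estimate that is consistently rescaled and offset.
estimate = [0.5 * r + 1.0 for r in reference]

trend = pearson(reference, estimate)
error = mean_abs_error(reference, estimate)
print(f"trend agreement (correlation): {trend:.3f}")
print(f"mean absolute error: {error:.2f} L/min")
```

Here the estimate rises and falls exactly when the reference does, so the correlation is essentially 1, yet every individual reading is off by roughly a liter per minute or more.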

Still, their method outperformed conventional techniques, showing that AI-assisted modeling can extract more meaningful heart insights from a simple optical sensor.

Here’s the researchers’ conclusion in their own words:

In this work we use a hybrid modeling approach to infer cardiovascular parameters from in-vivo PPG signals. Compared to purely data-driven approaches that struggle due to limited labeled data, our method achieves promising results by incorporating simulations and sidestepping the need for invasive and costly annotations. While other existing hybrid approaches for cardiovascular modeling either embed physical properties as structural constraints within neural networks or augment traditional physiological models with data-driven components, our method incorporates physical knowledge in the model through SBI. (…) Our results contribute to characterizing the informativeness of PPG signals for predicting cardiac biomarkers, and could extend beyond the ones considered in our experiments. While our results are promising in monitoring temporal trends, absolute value prediction of complex biomarkers remains challenging, and is a key direction for future work. Future work may also explore alternative generative approaches for the PPG-to-APW mapping, or investigate different architectural choices. Finally, a similar learning strategy than the one used here for finger PPG could extend to other modalities, including wearable PPG, and open the door to passive and long-term cardiac biomarker monitoring.

While it is impossible to know whether Apple will ever incorporate these features into the Apple Watch, it is encouraging to see that the company’s researchers are looking for novel ways to extract even more meaningful and potentially life-saving data from sensors that are already in use.

You can find the full study on arXiv.
