Summary:

AI chat and search let users describe needs without exact keywords, but many don’t know AI’s full capabilities or how to prompt effectively.

AI chatbots and AI-driven search can help people find what they’re looking for, even if they don’t know what they’re looking for. In this way, generative AI tools can greatly alleviate a major, longstanding pain point of the search-driven web. However, AI’s ability to help with this problem is still limited. In particular, many consumers are simply not aware of how powerful generative AI tools can be in information-seeking, and don’t know how to prompt to achieve better outcomes.

Keyword Foraging in Traditional Search

As one of our participants in recent qualitative studies on AI told us, “You don’t know what you don’t know.”

That’s an eternal challenge when humans seek to answer a question or learn about a topic. With traditional web search, users are severely limited by their ability to articulate their need. They have to know what they’re looking for in order to provide keywords to a search engine.

This challenge leads to a user behavior I’ve named keyword foraging — the search before the search.

When keyword foraging, a user conducts a preliminary search (usually in a web search engine like Google) to determine the right keywords for their information need.

For example, a shopper may want to buy that Y-shaped peeler that bartenders use to remove strips of citrus peel for cocktails but not know it’s called a “channel knife.” Before she finds the term that will get her what she wants, she will need to flail awkwardly through search engines and websites.

AI Streamlines Simple Keyword Foraging

Because generative AI chats and AI-powered search engines can accept wordy, complex questions, keywords are less of a barrier than they used to be. The ability to express an information need in full sentences, without proper terminology, is extremely powerful.

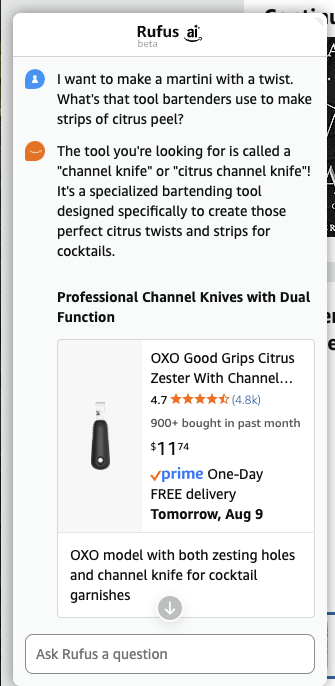

In the channel-knife example, the shopper can simply explain her goal and describe the thing she wants — it doesn’t matter if she doesn’t know the actual term.

For simple situations like this one, where the user knows what they need but does not know the correct keyword, generative AI greatly facilitates keyword foraging.

However, keyword foraging still represents an extra initial step before the user can search for channel knives to purchase (her actual goal). You’d expect Google’s AI Mode to pull in some options from Google Shopping in this instance, but it failed to.

In contrast, when I gave the same prompt to Amazon’s Rufus (its AI-powered shopping assistant), Rufus provided the correct term and some links to purchase options.

Articulation Challenges in Searching and Prompting: An Example from User Testing

It can be annoying and tedious to look for the right name of the thing you want to search for. But it’s even more difficult when you don’t know or can’t explain what you need in the first place. This situation goes beyond simple keyword foraging: before you can even begin to forage for keywords, you need to explain the problem and identify a potential solution.

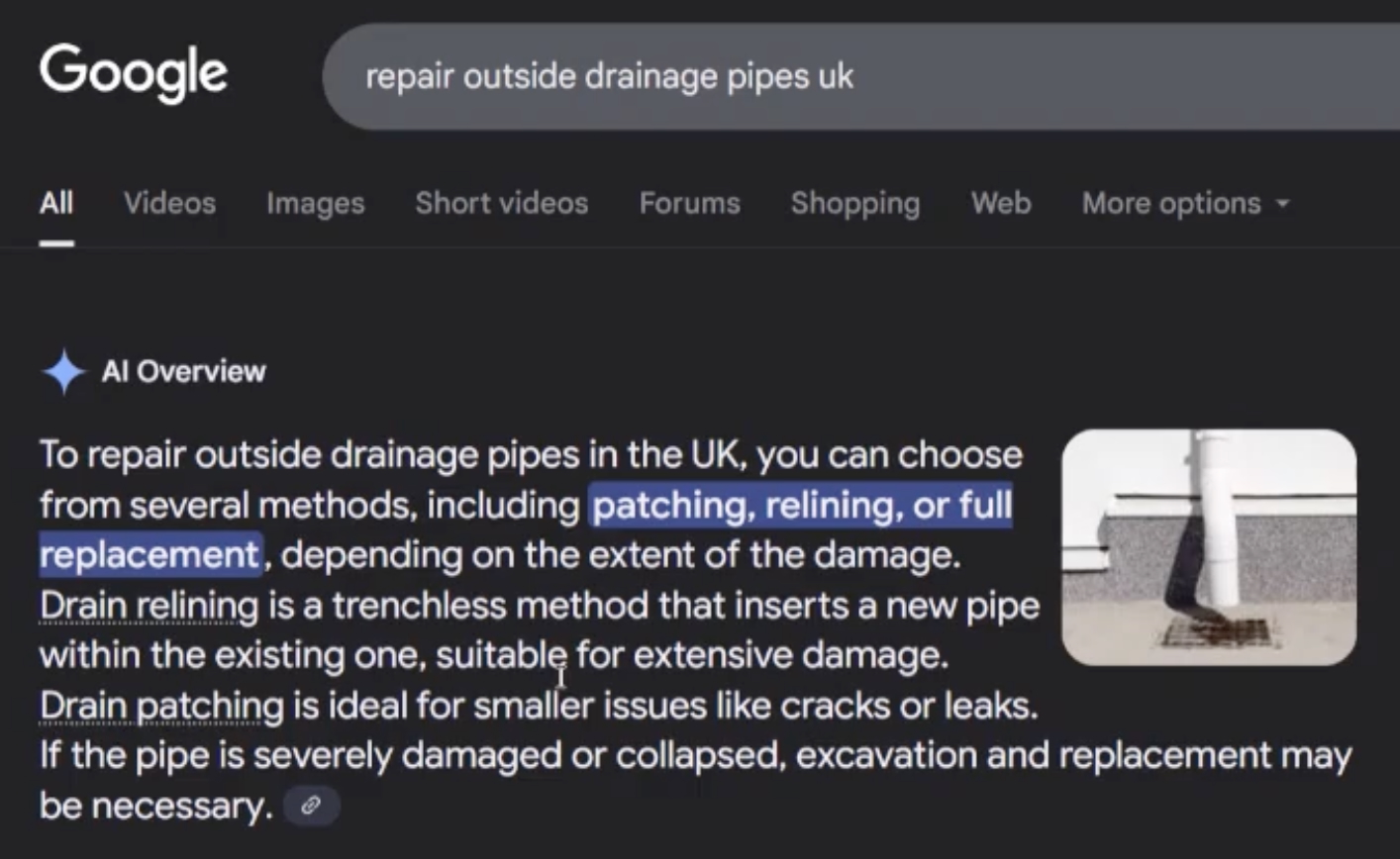

For example, one participant in our recent study had noticed that a drainage pipe outside his home was leaking and wanted to fix it by himself. He faced several challenges with this information need: to find a repair video, he needed to search for the right terms, but he had no plumbing vocabulary and no clear diagnosis of his issue.

He started at classic Google (his “search engine of choice”) and tried a traditional search query: repair outside drainage pipes uk. He immediately checked the resulting AI overview but was disappointed. He noticed the word “trenchless” and realized that the system was not accurately understanding his situation — it was advising on repairing underground wastewater pipes, while he was dealing with an above-ground pipe problem. “It’s going off in the wrong direction,” he told us.

He reflected that he probably wasn’t accurately describing the problem in his query, saying:

“The terms in my prompt might be wrong. I need to change my terms.”

He tried three iterations of his search query but kept getting similar results.

repair outside drainage pipes uk

repair outside drainage pipes fixed to exterior wall uk

repair outside waste water pipes fixed to exterior wall uk

“At this point, I’m getting quite frustrated,” he said. He proceeded to visit websites, including Reddit and YouTube, vainly looking for information scent to indicate he was moving in the right direction. After multiple disappointments over nearly 15 minutes, he told the facilitator that he might give up and just call a plumber to fix the problem.

The facilitator asked him to attempt his task with Gemini. This was the user’s first time using AI chat for something other than help writing emails and documents. His first prompt simply rephrased his previous queries in question format:

how do i fix leaking outside waste water drain pipes in the uk

He reviewed the prompt before submitting it, commenting, “I’ve learned to be more careful with my wording.”

He could have provided the additional details he’d mentioned to the facilitator, such as the pipe’s exact location outside a window, or the fact that it leaked when people used the shower. He could have explained that he was new to DIY plumbing projects and very unfamiliar with the subject. This is a major advantage of AI chat over traditional search: even if you don’t have the right keywords, you can at least use more words to describe the concept. You can even communicate your own knowledge level, so you receive information you can understand. But this participant didn’t provide these details, likely because of his limited prompting experience.

The imprecise prompt resulted in a very long Gemini response that included both relevant and irrelevant information.

“There’s a lot of information here that isn’t specific to how to actually fix it.”

He scanned through the text, pulling out the details that were most useful to his problem. While that sifting work was tedious, he did finally find some advice relevant to his situation.

In a followup prompt, he asked for guidance on how to perform the repair himself and requested a demo video. Gemini’s responses were longer and more conversational than those in Google AI Mode, and they helped him work through his articulation challenge.

He finally found the step-by-step instructions he was looking for. Gemini also provided search-keyword suggestions to help him find relevant YouTube videos by himself, directly supplying the language he’d been missing:

How to fix leaking PVC drain pipe

Self-fusing silicone tape pipe repair

Epoxy putty pipe repair

Pipe repair bandage leak fix

He was happy with this response, particularly because it provided the relevant terminology he was missing.

“That’s why I find it helpful because I can’t conjure up these terms in my head.

“It’s quite hard to find the right word. You know a problem, but you don’t know the best way to describe that problem when you’re a novice. Maybe if you’re a plumber, you know things like ‘PVC’… I didn’t know anything about ‘self-fusing silicone tape’ or ‘epoxy putty.’ All those terms are alien to me. So I’m using terms like ‘drainage pipes’ and not getting the output I want or the thing I need. It seems to me like Gemini has understood a bit more about what I need.”

Where Gemini Failed: A Discoverability Barrier

This participant was happy with Gemini’s output. He felt he received some useful information that pointed him in the direction of a solution, and he likely wouldn’t have been able to achieve that through traditional search.

However, this participant’s task could’ve been much easier — but he didn’t know that.

In this situation, Gemini should have met the user where he was by:

- Asking followup questions to narrow down the issue at the beginning (instead of launching into a long exposition about leaks)

- Presenting only the relevant solution(s) for his problem (instead of giving him long descriptions of multiple possible solutions, which he had to sift through)

- Providing the user with links to related YouTube videos (instead of giving him keywords to search for videos himself)

I’m guessing that many AI superfans would put the blame on the user here — he could have simply provided more contextual information from the beginning, or used a followup prompt to explicitly ask Gemini to find those videos for him. But this participant had no way of knowing those were possibilities. (And of course, in UX, we believe the product should adapt to the user — not the other way around.)

So while this user was able to work with Gemini to overcome his articulation barrier, there was another serious obstacle at play: a discoverability barrier.

The opaque, nebulous nature of generative AI makes it incredibly difficult for users to discover, explore, and understand its full range of possible uses, and how to achieve those outcomes.

Unfortunately, these systems are still very much designed for nerds like me who are happy to spend ample time learning about AI, reading documentation, watching demos, and following AI update news — all of which informs my mental model and understanding of how to use AI. But that isn’t realistic behavior to expect from most users. As a result, consumers are struggling to do more than scratch the surface of AI’s full information-seeking potential.

AI Doesn’t Yet Eliminate Information-Seeking Challenges

While generative AI tools significantly reduce the keyword-foraging and knowledge-gap problems users face when they search for information, they don’t eliminate those challenges completely.

People still need help naming what they want or the problem they’re facing. Our participants largely relied on slightly more verbose versions of traditional keyword searches, even those who reported using genAI frequently for a wide variety of tasks. Consumers are simply not aware of the ways AI can help them find the information they want, or how to make that happen. The UI design of current AI tools (not just Gemini) fails to help users overcome the discoverability barrier.

And finding information more quickly and easily isn’t all that useful if the information is incorrect. Although every new AI model promises fewer hallucinations and performs well in technical tests, in real-world use these models still often provide misleading or false information.

As a researcher who has been studying information-seeking for a decade, I’m excited to see how much AI tools can help people find the information they need. But we still have a long way to go in improving the usability, transparency, and reliability of these tools before they achieve their full potential.